This is my second post on the alignment of Thanksgiving and Hanukkah. Go back and read the first post, if you haven’t done so.

When compared to the Julian or Gregorian calendar, the Hebrew calendar is a different animal entirely. First of all, it is not a solar calendar, but is rather a lunisolar calendar. This means that while the years are kept in alignment with the solar year, the months are reckoned according to the motion of the moon. In ancient days, the start of the month was tied to the sighting of the new moon. Eventually, the Jewish people (and more specifically, the rabbis) realized that it would be better for the calendar to rely more on mathematical principles. Credit typically goes to Hillel II, who lived in the 300s CE. In the description that follows, I will be using Dershowitz and Reingold’s Calendrical Calculations as my primary source, with assistance from Tracy Rich’s Jew FAQ page.

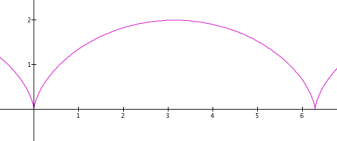

The typical Jewish year contains 12 months of 29 or 30 days each, and is often 354 days long. (See how I worded that? It matters.) Clearly, this is significantly shorter than the solar year, so some adjustments are necessary. Specifically, 7 of every 19 years are leap years. But instead of adding a leap day, the Hebrew calendar goes right ahead and adds an entire month (the 30-day Adar I, inserted ahead of the regular Adar, which then goes by Adar II), which adds 30 days to the length of the year. Mathematically, you can figure out if year y is a leap year by calculating (7y+1) mod 19: if the answer is < 7, then y is a leap year. In the current year, 5774, the calculation is 7*5774+1 = 40419 ≡ 6 (mod 19), so it’s a leap year. With just this fact, the average length of the year appears to be (12*354 + 7*384)/19 ≈ 365.053 days, about 4 1/2 hours shorter than the solar year, so the calendar would run fast. At a minimum, the leap months explain how Jewish holidays move through the Gregorian calendar: since the typical year is 354 days, a holiday will move earlier and earlier each year, until a leap month occurs, at which point it will snap back to a later date. (Next year, Hanukkah will be on 17 December.)
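Here is a minimal Python sketch of the leap-year arithmetic above. The function name `is_hebrew_leap_year` is my own, and the average uses the simplified model of 354-day ordinary years and 384-day leap years, not the full calendar.

```python
def is_hebrew_leap_year(y: int) -> bool:
    """Leap-year test from the text: y is a leap year when (7y + 1) mod 19 < 7."""
    return (7 * y + 1) % 19 < 7


# Positions of the leap years within one 19-year cycle (years 1 through 19).
leap_positions = [y for y in range(1, 20) if is_hebrew_leap_year(y)]

# Simplified model: 12 ordinary years of 354 days plus 7 leap years of 384 days.
average_year = (12 * 354 + 7 * 384) / 19

print(is_hebrew_leap_year(5774))  # True
print(leap_positions)             # [3, 6, 8, 11, 14, 17, 19]
print(round(average_year, 3))     # 365.053
```

The list shows the 7 leap years spread as evenly as possible through the 19-year cycle.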

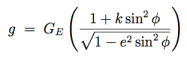

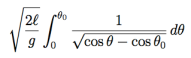

But it’s not as simple as all that. Because of the lunar origins of the Hebrew calendar, the beginning of the new year is determined by the occurrence of the new moon (called the molad) in the month of Tishrei (the Jewish New Year, Rosh Hashanah, is on 1 Tishrei). Thanks to the calendar reforms of Hillel II, this has become a purely mathematical process. Basically, you take a previously calculated molad and add the average length of the moon’s cycle, once per elapsed month, to get the molad for any future month. Adding a wrinkle to this calculation is the fact that the ancient Jews used a timekeeping system in which the day had 24 hours and each hour was divided into 1080 “parts”. (So, one part = 3 1/3 seconds.) In this system, the average length of a lunar cycle is estimated as 29d 12h 793p. While this estimate is many centuries old, it is incredibly accurate: the average synodic period of the moon is 29d 12h 792.86688p, a difference of less than half a second.
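To make the "parts" arithmetic concrete, here is a small Python sketch. The helper names `to_parts` and `from_parts` are my own inventions; the only inputs are the figures quoted above.

```python
PARTS_PER_HOUR = 1080
PARTS_PER_DAY = 24 * PARTS_PER_HOUR  # 25,920 parts in a day


def to_parts(days: int, hours: int, parts: int) -> int:
    """Convert a days/hours/parts triple into a single count of parts."""
    return days * PARTS_PER_DAY + hours * PARTS_PER_HOUR + parts


def from_parts(total: int):
    """Split a count of parts back into (days, hours, parts)."""
    days, rest = divmod(total, PARTS_PER_DAY)
    hours, parts = divmod(rest, PARTS_PER_HOUR)
    return days, hours, parts


# The traditional mean lunation: 29d 12h 793p.
LUNATION = to_parts(29, 12, 793)

# Twelve lunations, i.e. how far the molad advances over an ordinary year.
twelve_months = from_parts(12 * LUNATION)

# Gap between the traditional value and the astronomical figure quoted above.
seconds_per_part = 3600 / PARTS_PER_HOUR
diff_seconds = (793 - 792.86688) * seconds_per_part

print(LUNATION)                # 765433
print(twelve_months)           # (354, 8, 876)
print(round(diff_seconds, 2))  # 0.44
```

Twelve lunations come to 354d 8h 876p, which is where the "often 354 days" year length comes from.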

Once the molad of Tishrei has been calculated, there are 4 postponement rules, called the dechiyot, which add another layer to the calculation:

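- If the molad occurs at or after noon, Rosh Hashanah is postponed by a day. (Sources that give the cutoff as 18 hours are counting from 6pm the previous evening, when the Jewish day begins, so they mean the same moment.)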

- Rosh Hashanah cannot occur on a Sunday, Wednesday, or Friday. If it would, it gets postponed by a day.

- The year is only allowed to be 353-355 days long (or 383-385 days in a leap year). The first two rules can have the effect of making year y too long (356 days), in which case Rosh Hashanah in year y will get postponed to avoid this problem. The delay is two days, since a one-day delay would land on a disallowed Wednesday.

- If year y-1 is a leap year, and Rosh Hashanah for year y is on a Monday, year y-1 may be too short (382 days). In that case, Rosh Hashanah for year y gets postponed by a day.
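Here is how I would sketch the four rules in Python, following the style of arithmetic in Calendrical Calculations as I understand it. Two constants are assumptions on my part, not something derived in this post: `HEBREW_EPOCH` (1 Tishrei of year 1, a Monday, as a day number compatible with Python's `date.toordinal()`) and `MOLAD_OFFSET` (the first molad, 876 parts before midnight at the start of that Monday, shifted forward 12 hours so that floor division applies the noon rule). All function names are my own. In this sketch, rule 3 fires when year y itself would come out at 356 days.

```python
from datetime import date

PARTS_PER_DAY = 24 * 1080
LUNATION = 29 * PARTS_PER_DAY + 12 * 1080 + 793  # 29d 12h 793p, in parts
HEBREW_EPOCH = -1_373_427       # assumed day number of 1 Tishrei, year 1 (a Monday)
MOLAD_OFFSET = 12 * 1080 - 876  # assumed first molad, shifted by 12 hours


def months_before(y: int) -> int:
    """Lunar months elapsed before Tishrei of year y (12 or 13 per year)."""
    return (235 * y - 234) // 19


def raw_new_year(y: int) -> int:
    """Days from the epoch to Rosh Hashanah of year y, using rules 1 and 2 only."""
    molad = MOLAD_OFFSET + months_before(y) * LUNATION
    days = molad // PARTS_PER_DAY  # rule 1: a molad at noon or later rolls over
    if days % 7 in (2, 4, 6):      # rule 2: Wednesday, Friday, Sunday (epoch is Monday)
        days += 1
    return days


def rosh_hashanah(y: int) -> date:
    """Gregorian date of 1 Tishrei of year y, after all four rules."""
    prev, this, nxt = raw_new_year(y - 1), raw_new_year(y), raw_new_year(y + 1)
    if nxt - this == 356:     # rule 3: year y would be too long
        this += 2
    elif this - prev == 382:  # rule 4: year y-1 would be too short
        this += 1
    return date.fromordinal(HEBREW_EPOCH + this)


def year_length(y: int) -> int:
    """Length of Hebrew year y in days."""
    return (rosh_hashanah(y + 1) - rosh_hashanah(y)).days


print(rosh_hashanah(5774))  # 2013-09-05
print(year_length(5774))    # 385
```

Looping `year_length` over a range of years is a quick way to convince yourself that rules 3 and 4 really do keep every year inside 353-355 or 383-385 days.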

As someone who’s relatively new to the Hebrew calendar, all of this was very confusing to me. For one thing, it’s not clear that rules 3 and 4 will really keep the length of the year in the correct range. For another, it’s not clear what you’d do with the “extra” days that are inserted or removed. Here’s how I think of it: the years in the Hebrew calendar don’t live in arithmetical isolation, but are designed to be elastic. You can stretch or shrink adjacent years by a day or two so that each year begins on an allowable day. When a year needs to be stretched, a leap day is included at the end of the month of Cheshvan (30 days instead of its usual 29). When a year needs to be shrunk, an “un-leap” day is removed from the end of Kislev (29 days instead of its usual 30).
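As a small illustration of the "elastic year" idea, this Python sketch maps a year length to the lengths of Cheshvan and Kislev. The function name `cheshvan_kislev_lengths` is hypothetical; the only facts it encodes are the ones stated above.

```python
def cheshvan_kislev_lengths(year_length: int):
    """Return (Cheshvan, Kislev) lengths in days for a year of the given length."""
    base = 384 if year_length > 380 else 354  # regular leap or regular ordinary year
    stretch = year_length - base              # -1 shrunk, 0 regular, +1 stretched
    if stretch not in (-1, 0, 1):
        raise ValueError(f"not a valid Hebrew year length: {year_length}")
    cheshvan = 30 if stretch == 1 else 29     # leap day at the end of Cheshvan
    kislev = 29 if stretch == -1 else 30      # "un-leap" day removed from Kislev
    return cheshvan, kislev


for length in (353, 354, 355, 383, 384, 385):
    print(length, cheshvan_kislev_lengths(length))
```

Only these two months ever change, so every other month keeps the same length from year to year.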

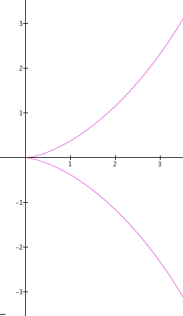

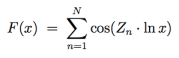

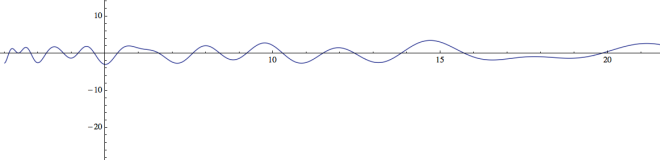

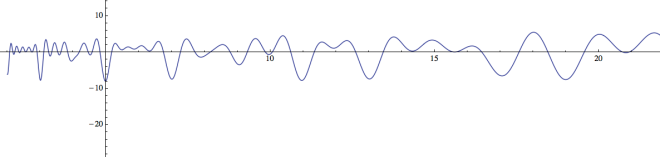

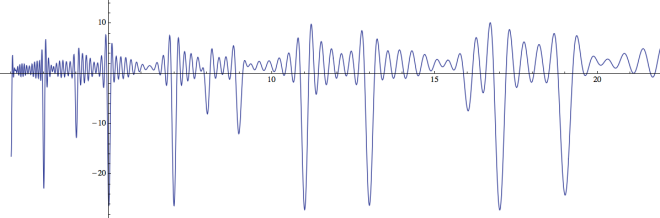

Now here’s the question my mathematician’s soul wants to answer: How long is the period for the Hebrew calendar? This might seem an impossible question in light of all the postponement rules, but it turns out that the molad advances by exactly the same amount over each block of 19 years (235 lunar months): 6939d 16h 595p, or 991 weeks with a remainder of 69,715 parts. As with the Julian calendar, the days of the week don’t match from block to block, so we need to use the length of a week (181,440 parts) and find the least common multiple. Using parts as the basic unit of measurement, we have:

lcm(69715, 181440) = 2,529,817,920 parts, which is 36,288 remainders of 69,715 parts each. So the period is 36,288 blocks × 19 years = 689,472 years.
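If you want to check this arithmetic yourself, here is a short Python sketch. The variable names are my own, and I compute the lcm via `math.gcd` so that it runs on older Python versions too.

```python
from math import gcd

PARTS_PER_DAY = 24 * 1080                        # 25,920
LUNATION = 29 * PARTS_PER_DAY + 12 * 1080 + 793  # 29d 12h 793p, in parts
WEEK = 7 * PARTS_PER_DAY                         # 181,440 parts

# A 19-year block holds 12*12 + 7*13 = 235 lunar months.
block = 235 * LUNATION
weeks, remainder = divmod(block, WEEK)

# Least common multiple of the weekly remainder and the week itself.
lcm_parts = remainder * WEEK // gcd(remainder, WEEK)

# Number of 19-year blocks needed before the remainders add up to whole weeks.
blocks = lcm_parts // remainder
period_years = 19 * blocks

print(weeks, remainder)  # 991 69715
print(lcm_parts)         # 2529817920
print(blocks)            # 36288
print(period_years)      # 689472
```

Note that the lcm counts accumulated remainders, not elapsed time: it takes 36,288 blocks for the leftover parts to sum to a whole number of weeks.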

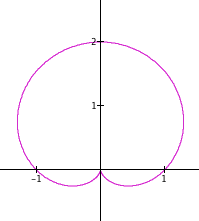

Wow! We can also calculate the “combined period” of the Hebrew and Gregorian calendars, to see how frequently they will align exactly. Writing the average year lengths as fractions, the calculation is:

lcm(689472*(365+24311/98496), 400*(365+97/400)) = 5,255,890,855,047 days = 14,390,140,400 Gregorian years = 14,389,970,112 Hebrew years.
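The same check for the combined period, again as a hedged Python sketch with names of my own choosing. It uses exact fractions so that no rounding sneaks in.

```python
from fractions import Fraction
from math import gcd

# Mean Hebrew year: 235 lunations of 765,433 parts, spread over 19 years of
# 25,920-part days.
hebrew_year = Fraction(235 * 765433, 19 * 25920)
gregorian_year = 365 + Fraction(97, 400)

# Full periods of each calendar, in days; both come out as whole numbers.
hebrew_period = 689472 * hebrew_year
gregorian_period = 400 * gregorian_year
assert hebrew_period.denominator == 1 and gregorian_period.denominator == 1
h, g = hebrew_period.numerator, gregorian_period.numerator

combined_days = h * g // gcd(h, g)
gregorian_years = combined_days // g * 400
hebrew_years = combined_days // h * 689472

print(hebrew_year == 365 + Fraction(24311, 98496))  # True
print(h, g)             # 251827457 146097
print(combined_days)    # 5255890855047
print(gregorian_years)  # 14390140400
print(hebrew_years)     # 14389970112
```

The two periods share only a factor of 7, which is why the combined period is so enormous.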

For comparison, the age of the universe is about 13,730,000,000 years. So while particular dates can align more frequently (for instance, Thanksgivukkah last occurred in 1888), the calendars as a whole won’t ever realign. However, I suppose that claim depends on your view of the expansion of the universe!